I just recently started a new job as Developer Educator at PlanetScale, a serverless, MySQL-compatible database platform. A big part of my role at the company is creating resources to help other developers understand the platform and more easily build stuff around it. I’ve worked with MySQL over my years as a developer but still need to get familiar with PlanetScale as a platform. So as a way to learn it better, I decided to port the backend of my Saas GuardianForge over to PlanetScale.

At the end of this article, I’ll drop a link to the GitHub branch that all of this work is being done on for research purposes.

The Goal

The end goal of this exercise is to cut over a few of the database operations from DynamoDB to PlanetScale, particularly around Builds which are the primary entities of GuardianForge. I’ll be updating the following operations:

- Creating a Build (Create)

- Updating a Build (Update & Read One)

- Archive a Build (Delete)

- Getting the Latest Builds (Read Many)

All of this currently happens in a single table, so no joins will be taken into consideration, but it tests all of the standard CRUD logic that applies to database platforms.

As info, CRUD is an acronym in the data world that stands for Create, Read, Update, Delete.

PlanetScale Setup

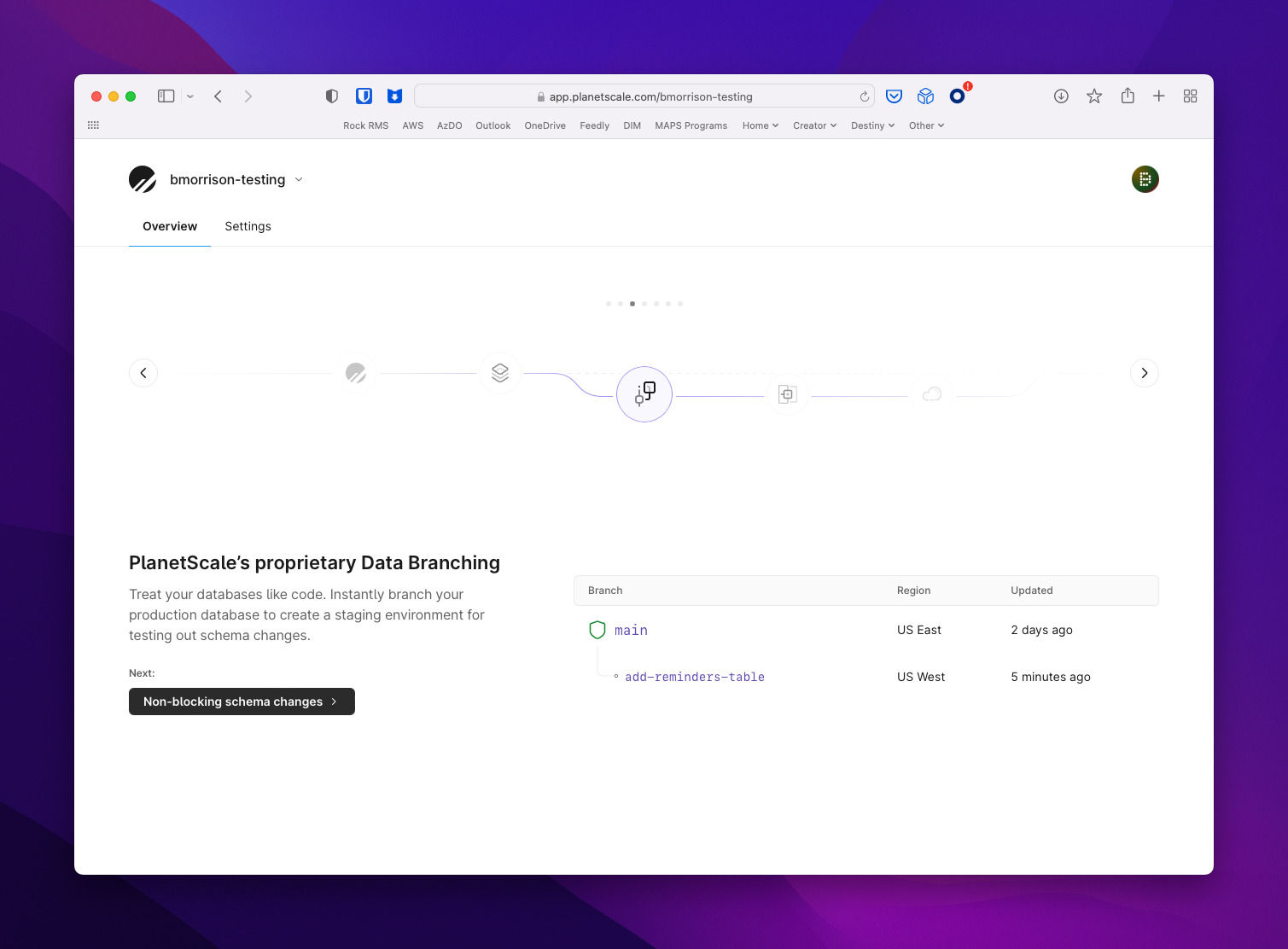

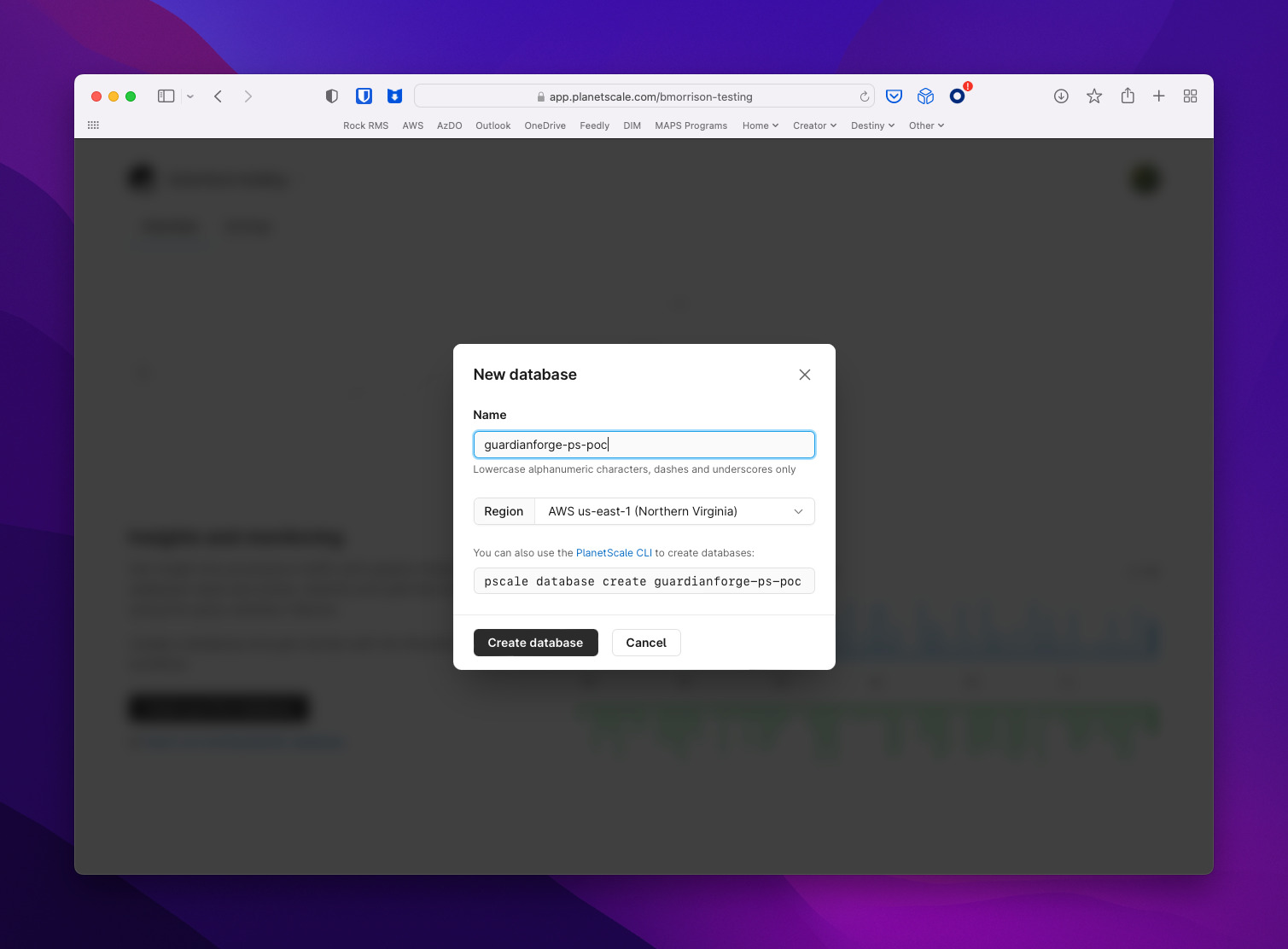

Although I am now employed with PlanetScale, my experience with the platform to this point has been pretty small, so I’m approaching this from the ground level. I setup a new organization in PlanetScale for testing and was presented with a pretty nice wizard walking me through some of the PlanetScale basics. Branching is one of the core features as I understand, so its pretty front & center in the wizard. At the end of this, I was prompted to create a database.

The view I'm presented with upon signing in for the first time.

Details about branching & how it works.

The last view of the wizard, prompting me to create a database.

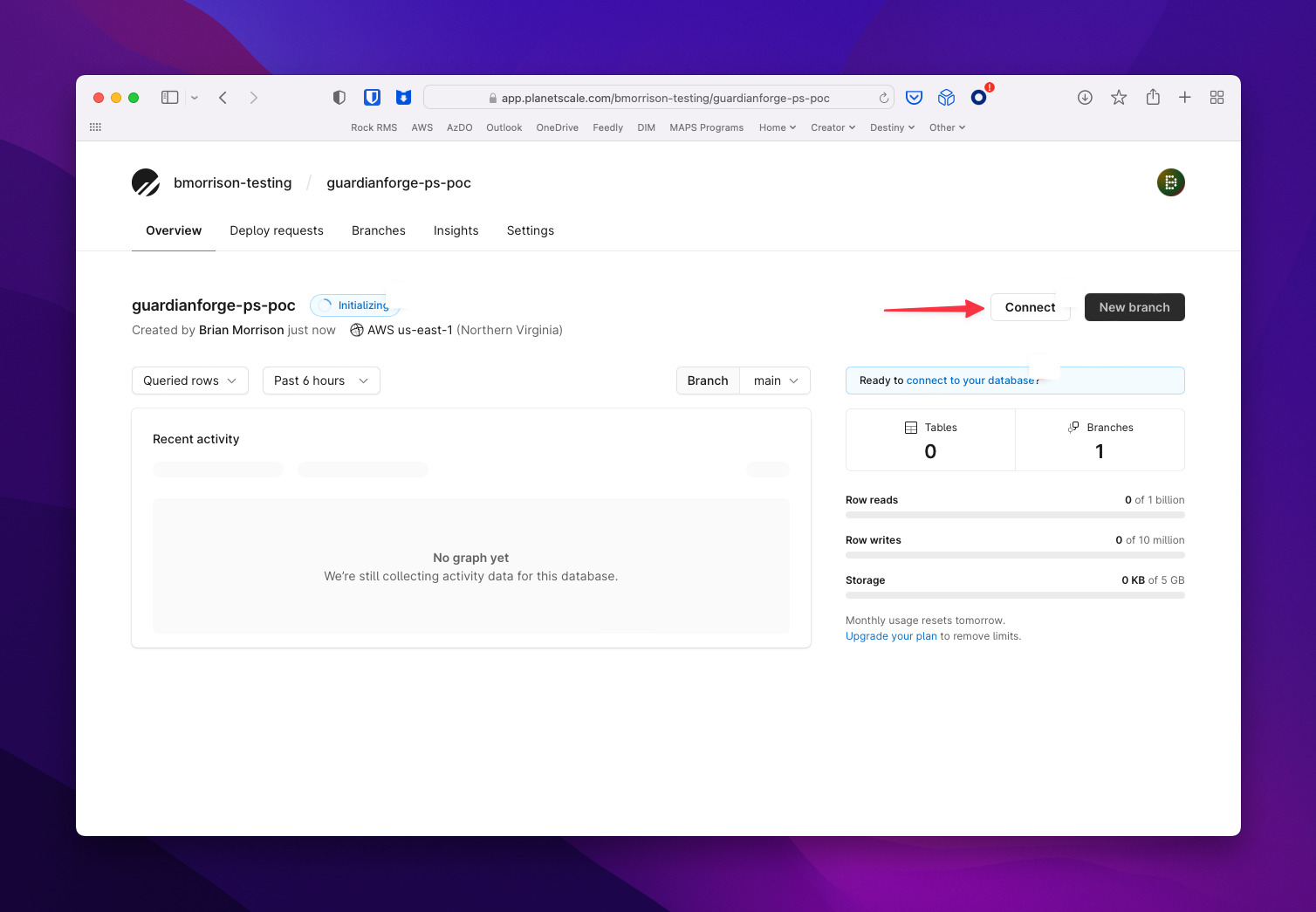

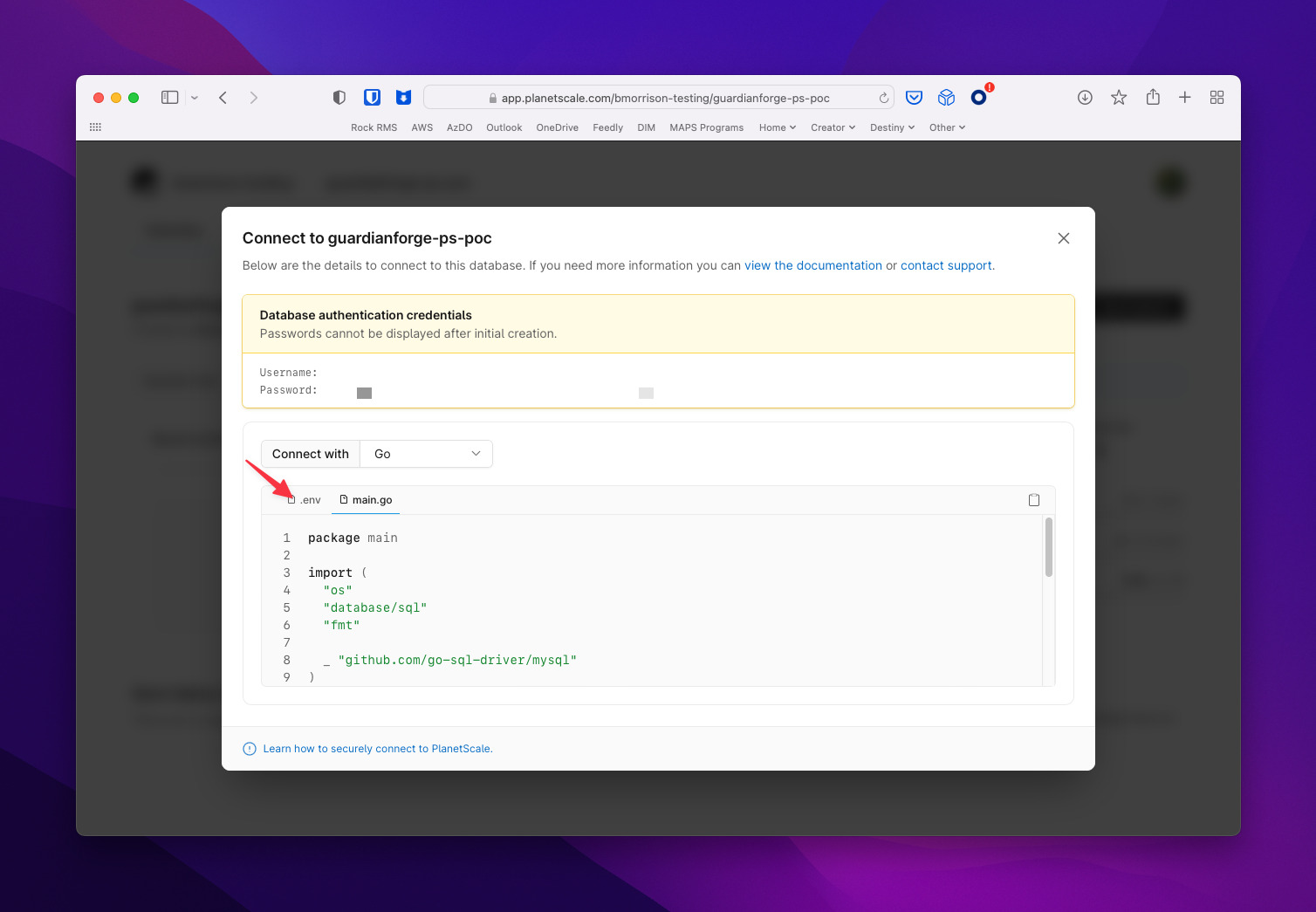

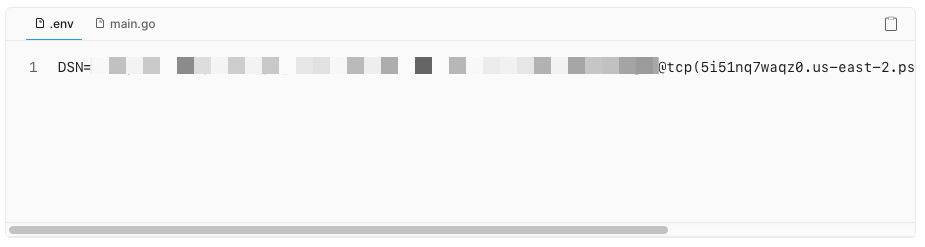

Once my DB was created, I was able to grab my connection string using the button in the first image below. It’s a standard MySQL connection string for Go.

Clicking the Connect button displays the connection string.

Once opening this view, I had to select the '.env' tab to see the connection string.

And here is what would be used to connect to the database.

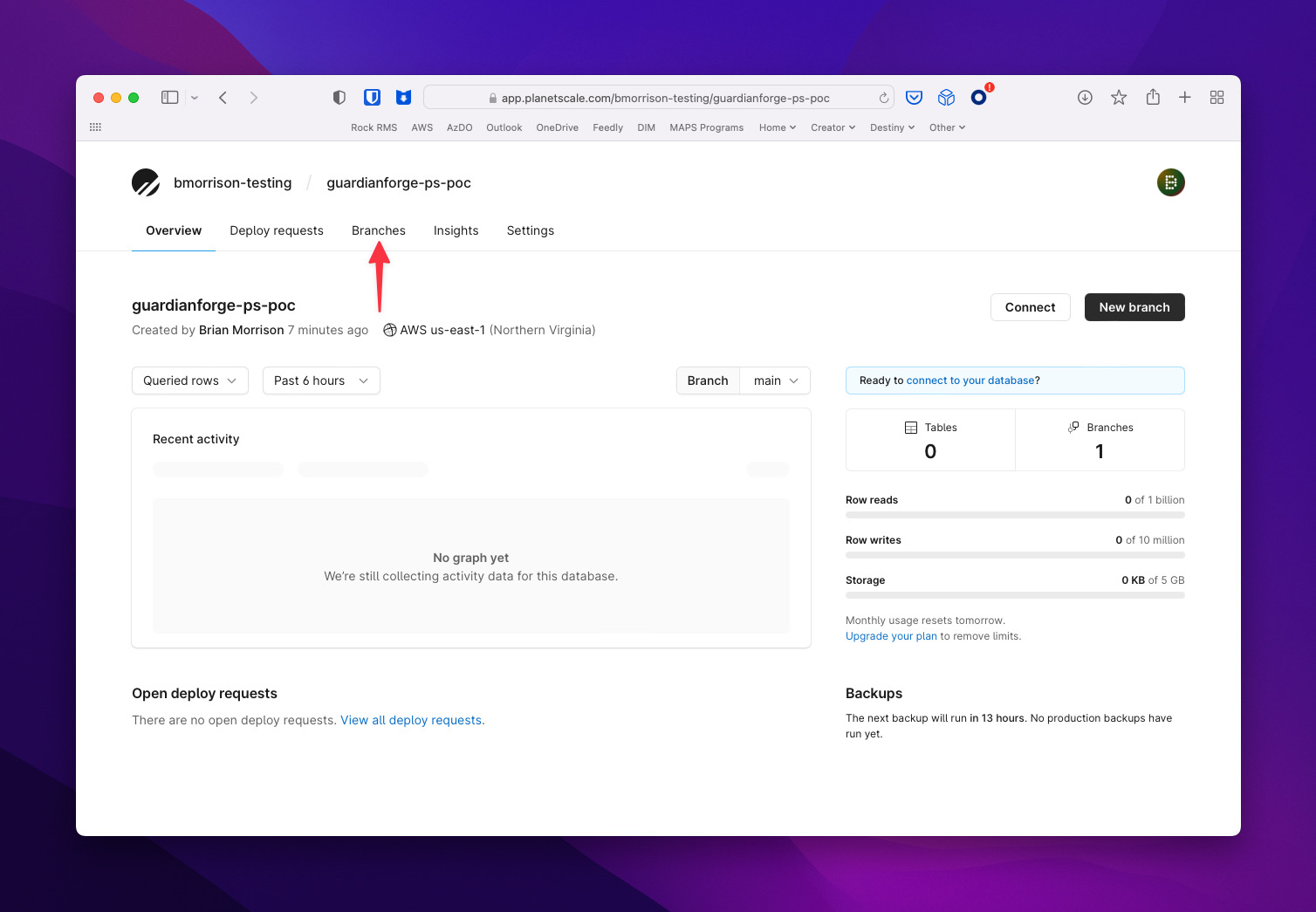

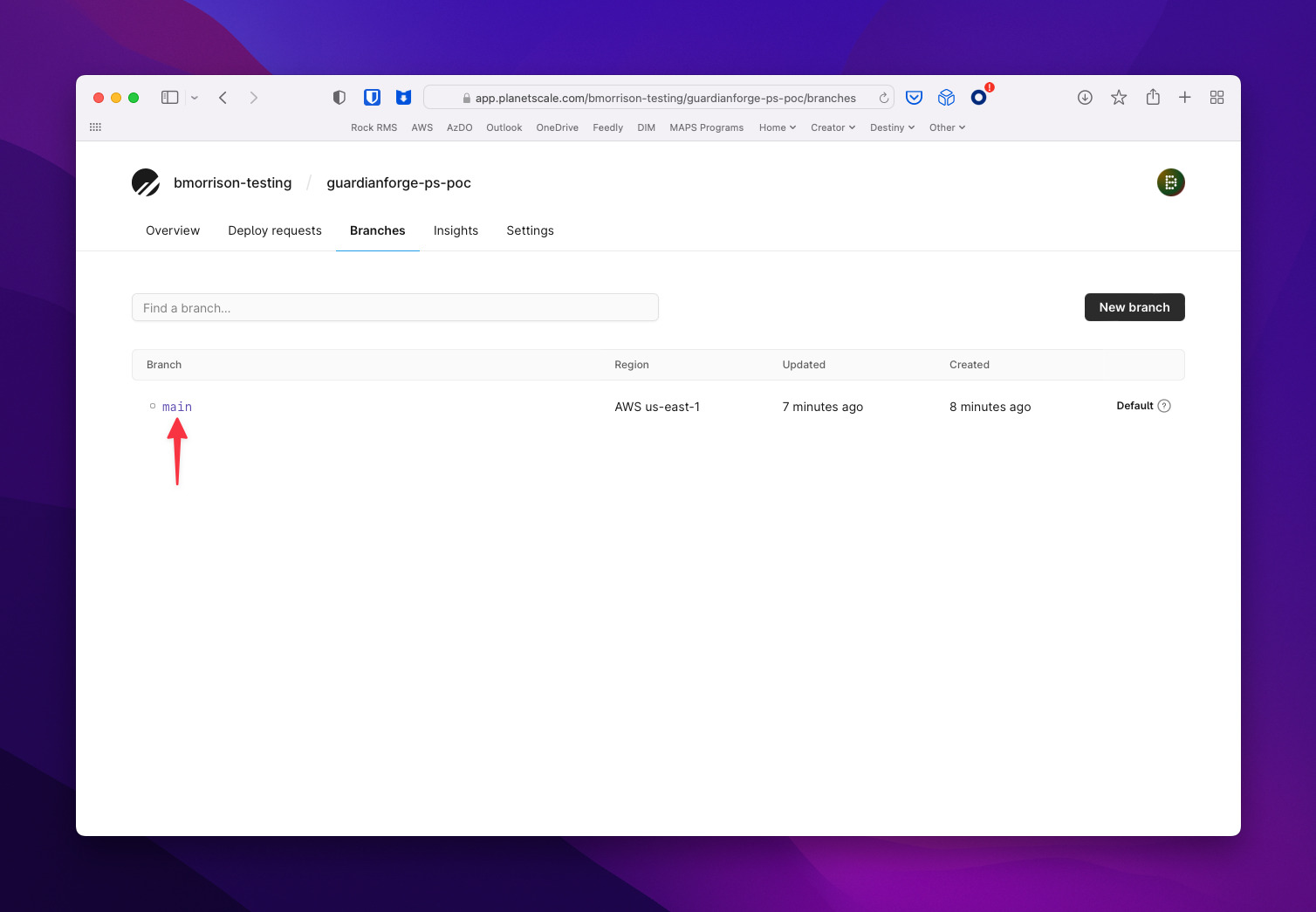

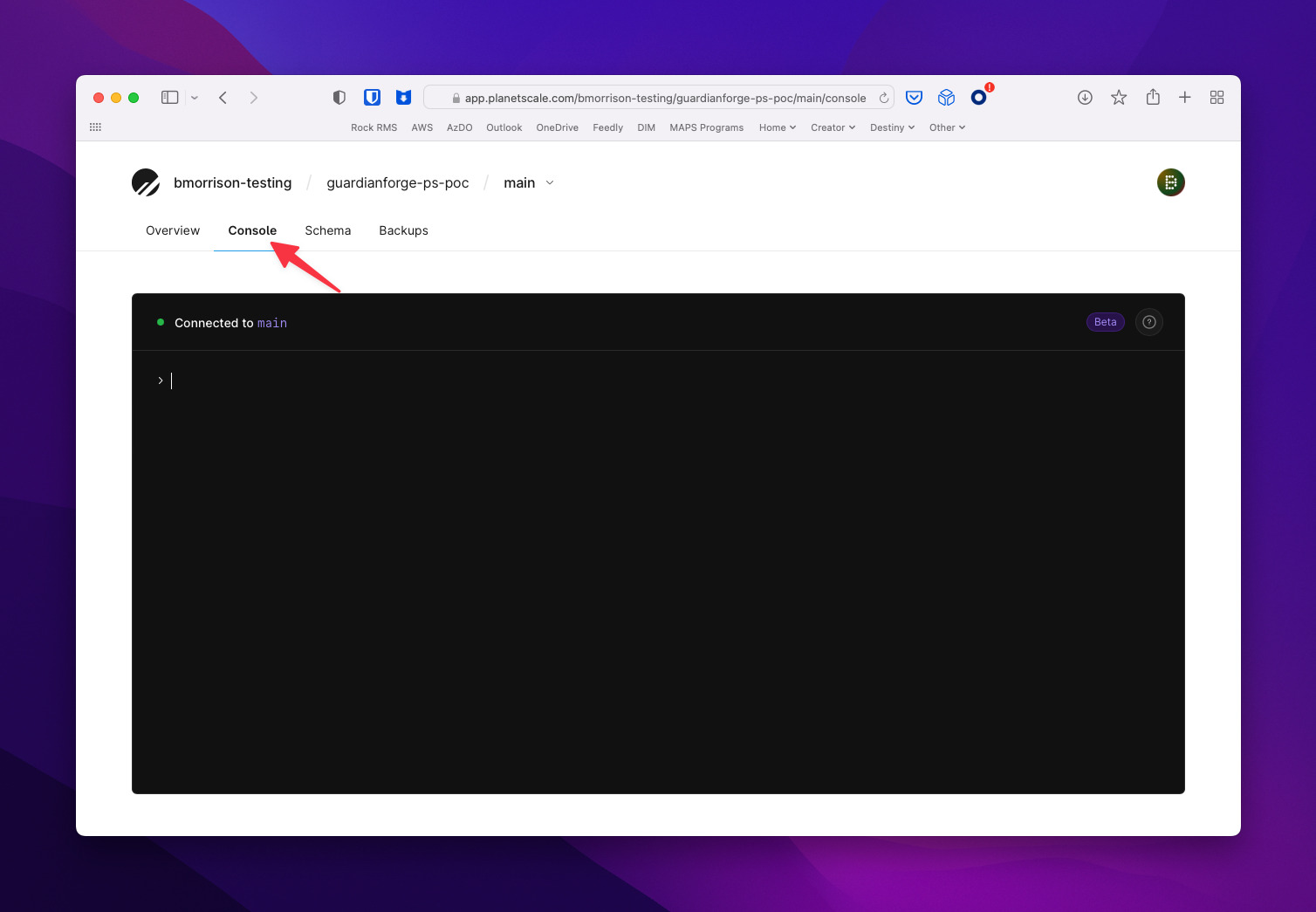

The main branch is created by default, so in order to drop into a web console, I had to navigate to Branches, then select the main branch, then Console.

The Branches tab takes you to a view to manage branches for the database.

Selecting the main branch will drop you into those settings.

Select Console gets you an in-browser terminal to manage the database/branch.

Now before I created my table, I wanted to review the existing Build struct in the code to determine what fields were necessary to store in PlanetScale. I store only meta data about a build in the database, whereas the bulk of the data is in S3. Below are the structs that make up a Build. The BuildSummary is included since its stored as JSON in Dynamo and I wanted to abstract some of the fields to store them directly in the table.

type Build struct {

// DynamoDB Partition Key

EntityType string `json:"entityType" dynamodbav:"entityType"`

// DynamoDB Sort Key

Id string `json:"entityId" dynamodbav:"entityId"`

PublishedOn int64 `json:"publishedOn" dynamodbav:"publishedOn"`

CreatedById string `json:"createdById" dynamodbav:"createdById"`

IsPrivate bool `json:"isPrivate" dynamodbav:"isPrivate"`

SearchKey string `json:"searchKey" dynamodbav:"searchKey"`

Summary BuildSummary `json:"summary" dynamodbav:"summary"`

Upvotes int `json:"upvotes" dynamodbav:"upvotes"`

SeasonalUpvotes map[string]int `json:"seasonalUpvotes" dynamodbav:"seasonalUpvotes"`

}

type BuildSummary struct {

UserId string `json:"userId" dynamodbav:"userId"`

Username string `json:"username" dynamodbav:"username"`

Highlights []string `json:"highlights" dynamodbav:"highlights"`

Name string `json:"name" dynamodbav:"name"`

PrimaryIconSet string `json:"primaryIconSet" dynamodbav:"primaryIconSet"`

// Non DynamoDB Fields

PublishedOn *int64 `json:"publishedOn"`

BuildId *string `json:"id"`

Activity *int `json:"activity"`

Upvotes *int `json:"upvotes"`

SeasonalUpvotes *map[string]int `json:"seasonalUpvotes"`

}

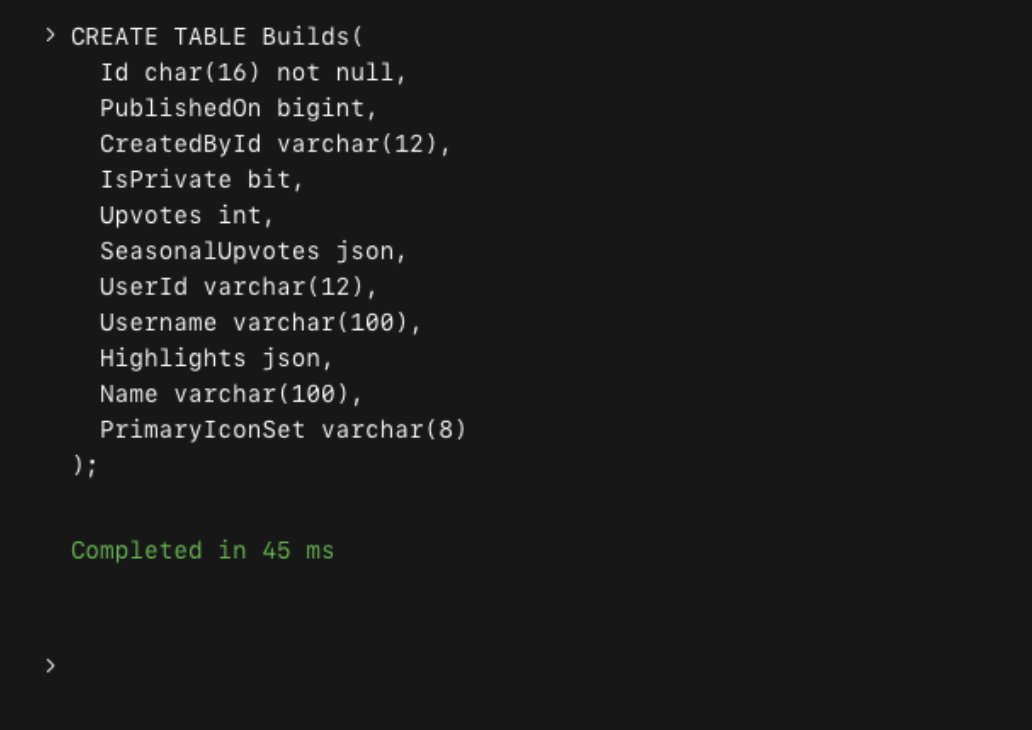

So using the structs above, I came up with this script to create the Builds table.

The initial script I used to create the Builds table.

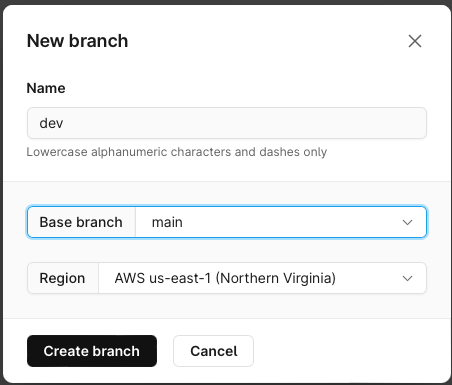

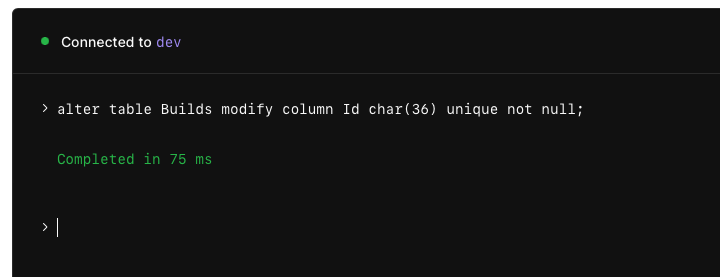

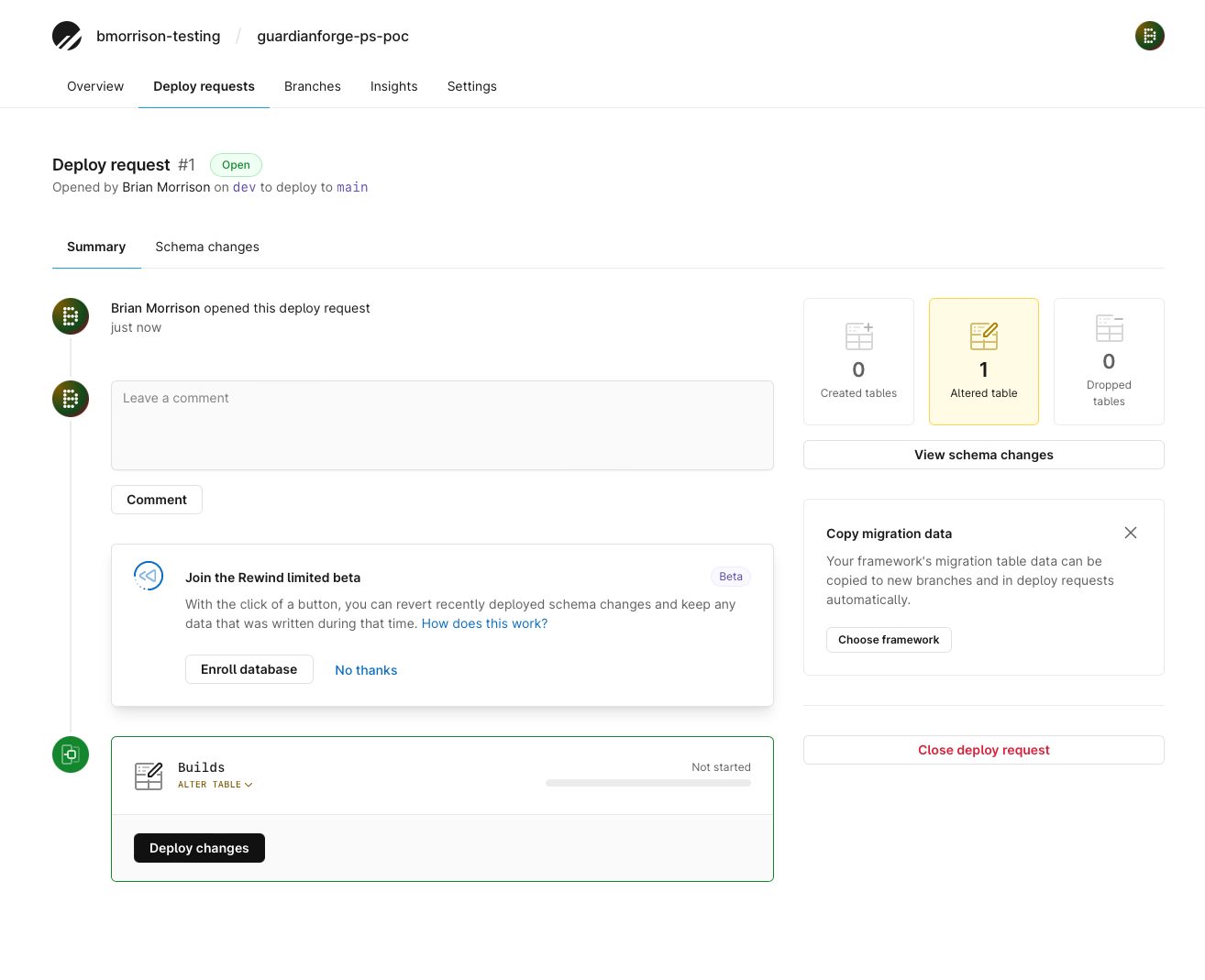

The console took the script no problem, but when I tried to promote my main branch to be the production DB, an error was thrown since I didn’t flag my Id column with the unique keyword. PlanetScale doesn’t let you perform schema updates on the production branch, so I needed to create a new branch, make the changes there, and merge them into main. I also changed the Id column type to make room for more data (a mistake in my original script).

The New branch modal.

This was the script I used to fix the table before I could work with it.

This is the view to 'merge' branches in PlanetScale.

Now that my table has been created, I was ready to start building in my Go backend.

Creating a Build

Instead of seeing data from Dynamo, I decided to start with the logic around creating builds so I can insert data into the table as if it was coming from the UI. I started by creating a new file called planetscale.go to encapsulate all of my logic for working with the service. I added a simple connect method to the file that can be called from each function that runs. I’m using the connection string provided when I created the database and storing it as the PS_CONN_STR environment variable. Since this is all in a serverless environment (AWS Lambda) I don’t need to worry too much about checking whether or not a connection already exists.

// backend/core/services/planetscale.go

var psdb *sql.DB

func connect() error {

_psdb, err := sql.Open("mysql", os.Getenv("PS_CONN_STR"))

if err == nil {

psdb = _psdb

}

return err

}

Then I created a CreateBuild function, that will write data to PlanetScale as the API is hit. What’s important to note here is that this is all standard MySQL code in Go. There is nothing necessary native to PlanetScale going on here, which is pretty neat.

// backend/core/services/planetscale.go

func CreateBuild(buildRecord dbModels.Build) error {

err := connect()

if err != nil {

return errors.Wrap(err, "(CreateBuild) connect")

}

// This is just a method to flatten the Build & BuildSummary structs

psbuild := buildRecord.ToPSBuild()

query := `INSERT INTO Builds(Id,

PublishedOn,

CreatedById,

Upvotes,

SeasonalUpvotes,

UserId,

Username,

Highlights,

Name,

PrimaryIconSet) VALUES (?, ?, ?, ?, ?, ?, ?, ?, ?, ?)`

stmt, err := psdb.Prepare(query)

if err != nil {

return errors.Wrap(err, "(CreateBuild) Prepare")

}

defer stmt.Close()

_, err = stmt.Exec(

psbuild.Id,

psbuild.PublishedOn,

psbuild.CreatedById,

psbuild.Upvotes,

psbuild.SeasonalUpvotes,

psbuild.UserId,

psbuild.Username,

psbuild.Highlights,

psbuild.Name,

psbuild.PrimaryIconSet,

)

if err != nil {

return errors.Wrap(err, "(CreateBuild) Exec")

}

}

Now in the Lambda function that actually handles the POST /builds request, I just commented out the code that hit Dynamo and added the necessary code to hit the new function above.

// backend/stack/api/builds/_post/main.go

// err = services.PutBuildToDynamo(sess, dbBuild)

// if err != nil {

// return utils.ErrorResponse(err, "(handler) put build to dynamo")

// }

err := services.CreateBuild(dbBuild)

if err != nil {

return utils.ErrorResponse(err, "(handler) CreateBuild")

}

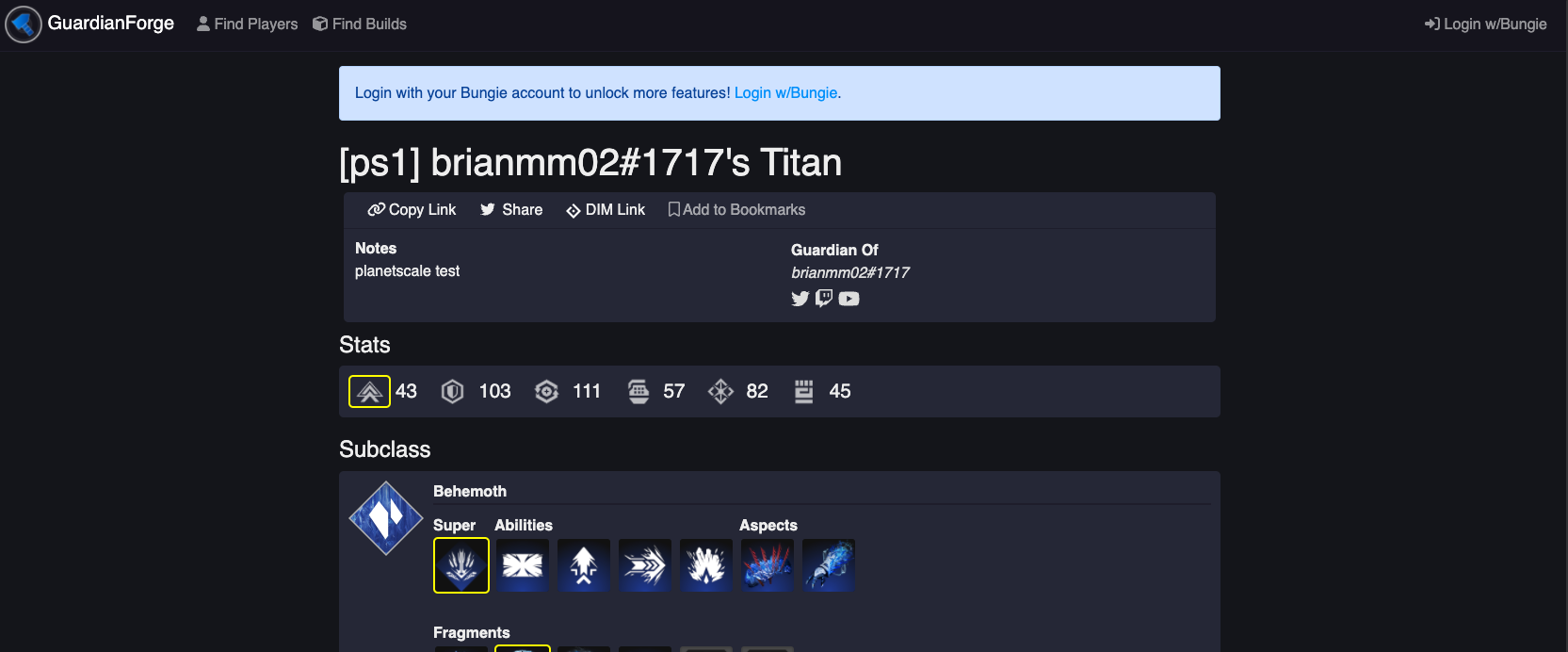

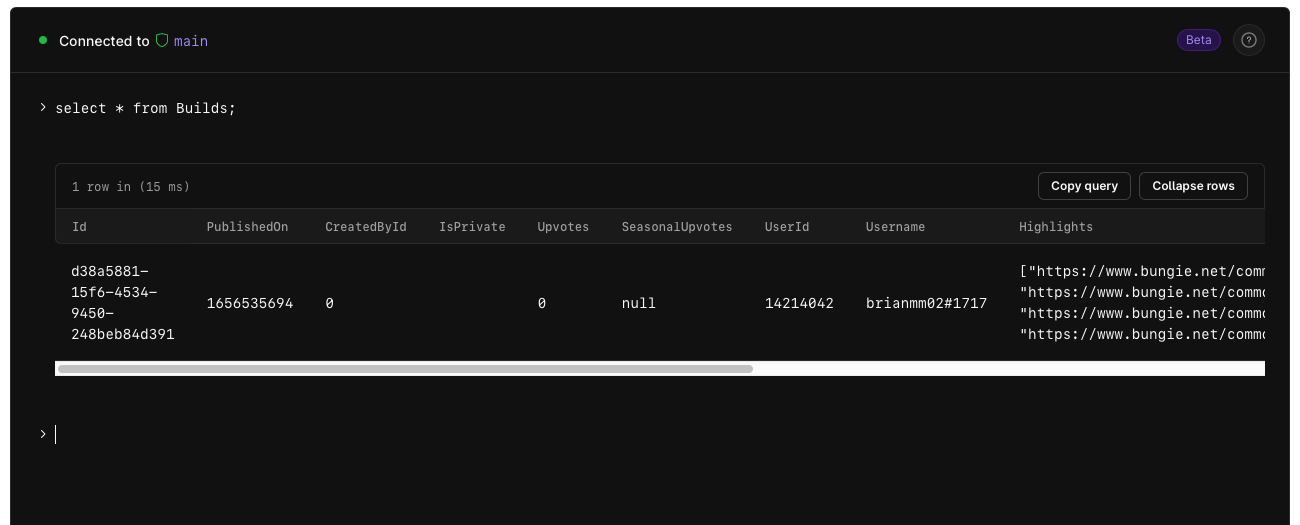

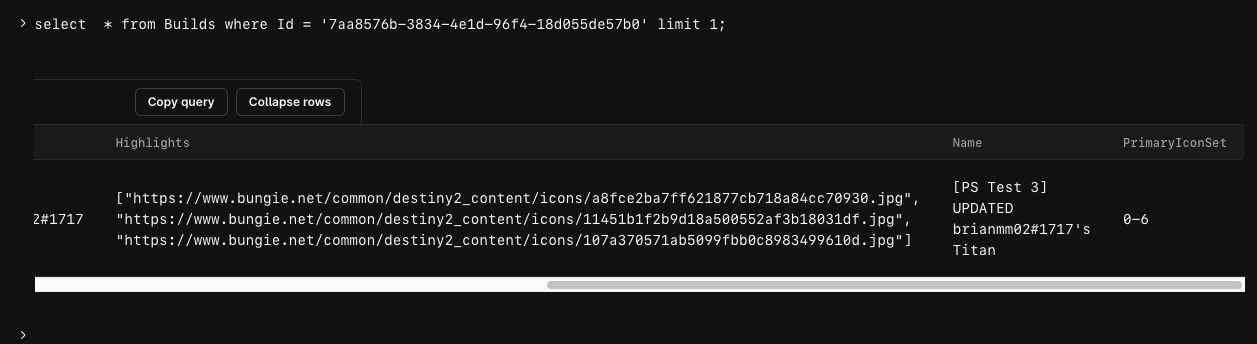

And we had a successful build created! Below is the UI from when the build was created followed by a select statement from within the PlanetScale web console on the main branch (this needed to be turned on in my DB settings, otherwise it’s disabled by default).

The view after a build was created.

The data in PlanetScale.

Updating a Build

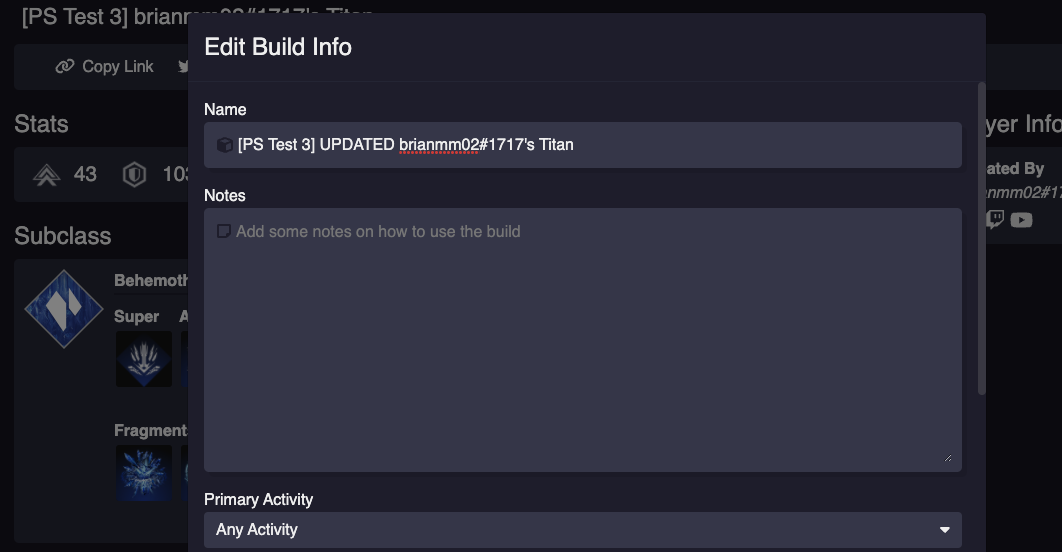

Certain fields in a Build are allowed to be updated at a future date. Most of that data is stored in the S3 document but the build name is stored in the database, so that’s the only field we’ll target here.

I updated my planetscale.go and added the following function. As you can see it’s very similar to the CreateBuildfunction, just with a different query and slightly different parameters. Honestly most of the functions written will look very similar, which is just the nature of working with a database.

// backend/core/services/planetscale.go

func UpdateBuild(buildId string, buildRecord dbModels.Build) error {

err := connect()

if err != nil {

return errors.Wrap(err, "(UpdateBuild) connect")

}

psbuild := buildRecord.ToPSBuild()

query := `UPDATE Builds SET Name = ? WHERE Id = ?`

stmt, err := psdb.Prepare(query)

if err != nil {

return errors.Wrap(err, "(UpdateBuild) Prepare")

}

defer stmt.Close()

_, err = stmt.Exec(

psbuild.Name,

buildId,

)

if err != nil {

return errors.Wrap(err, "(UpdateBuild) Exec")

}

return nil

}

I updated the code in the PUT function that performs updates on the builds. Notice there is also a call to grab the record from Dynamo first to ensure it exists before trying to update anything. Since I cut over the functionality to create builds to PlanetScale, that data won’t exist in Dynamo, so I needed to create the function to fetch a single build from PlanetScale.

// backend/core/services/planetscale.go

func PSFetchBuildById(buildId string) (*dbModels.Build, error) {

err := connect()

if err != nil {

return nil, errors.Wrap(err, "(PSFetchBuildById) connect")

}

query := "SELECT * FROM Builds WHERE Id = ? LIMIT 1"

stmt, err := psdb.Prepare(query)

if err != nil {

return nil, errors.Wrap(err, "(PSFetchBuildById) Prepare")

}

defer stmt.Close()

build := dbModels.Build{

Summary: dbModels.BuildSummary{},

}

var isPrivate *bool

var seasonalUpvotesString string

var highlightsString string

err = stmt.QueryRow(buildId).Scan(

&build.Id,

&build.PublishedOn,

&build.CreatedById,

&isPrivate,

&build.Upvotes,

&seasonalUpvotesString,

&build.Summary.UserId,

&build.Summary.Username,

&highlightsString,

&build.Summary.Name,

&build.Summary.PrimaryIconSet,

)

if err != nil {

return nil, errors.Wrap(err, "(PSFetchBuildById) execQuery")

}

if isPrivate != nil && *isPrivate == true {

build.IsPrivate = true

}

if seasonalUpvotesString != "" {

err = json.Unmarshal([]byte(seasonalUpvotesString), &build.Summary.Highlights)

if err != nil {

return nil, errors.Wrap(err, "(PSFetchBuildId) unmarshal seasonal upvotes")

}

}

if highlightsString != "" {

err = json.Unmarshal([]byte(highlightsString), &build.Summary.Highlights)

if err != nil {

return nil, errors.Wrap(err, "(PSFetchBuildId) unmarshal highlights")

}

}

return &build, err

}

Here is the updated code in the PUT function that does everything it needs to with PlanetScale over Dynamo.

// backend/stack/api/builds/_put/main.go

// Fetch from Dynamo, confirm userId matches

// record, err := services.FetchBuildById(buildId)

record, err := services.PSFetchBuildById(buildId)

if err != nil {

return utils.ErrorResponse(err, "(updateBuild) FetchBuildsById")

}

if record.CreatedById != *membershipId {

return utils.UnauthorizedResponse(nil)

}

if requestModel.Name != nil {

// sess, err := session.NewSession()

// if err != nil {

// return utils.ErrorResponse(err, "(updateBuild) Creating AWS session to update Dynamo record")

// }

record.Summary.Name = *requestModel.Name

// err = services.PutBuildToDynamo(sess, *record)

// if err != nil {

// return utils.ErrorResponse(err, "(updateBuild) Put build to Dynamo")

// }

err = services.UpdateBuild(buildId, *record)

if err != nil {

return utils.ErrorResponse(err, "(updateBuild) Update build in PlanetScale")

}

}

And finally, testing the code all the way through.

Updating the Build in GuardianForge.

The updated build, note the UPDATED in the Name column.

Archiving a Build

Archiving is effectively a DELETE call in the API. It wipes the database records from Dynamo, so I’m doing the same in PlanetScale here. This is easily the simplest of all functions so far, note the simple change in the query variable over the UpdateBuild function.

// backend/core/services/planetscale.go

func DeleteBuild(buildId string) error {

err := connect()

if err != nil {

return errors.Wrap(err, "(DeleteBuild) connect")

}

query := `DELETE FROM Builds WHERE Id = ?`

stmt, err := psdb.Prepare(query)

if err != nil {

return errors.Wrap(err, "(DeleteBuild) Prepare")

}

defer stmt.Close()

_, err = stmt.Exec(buildId)

if err != nil {

return errors.Wrap(err, "(DeleteBuild) Exec")

}

return nil

}

Fetching the Latest Builds

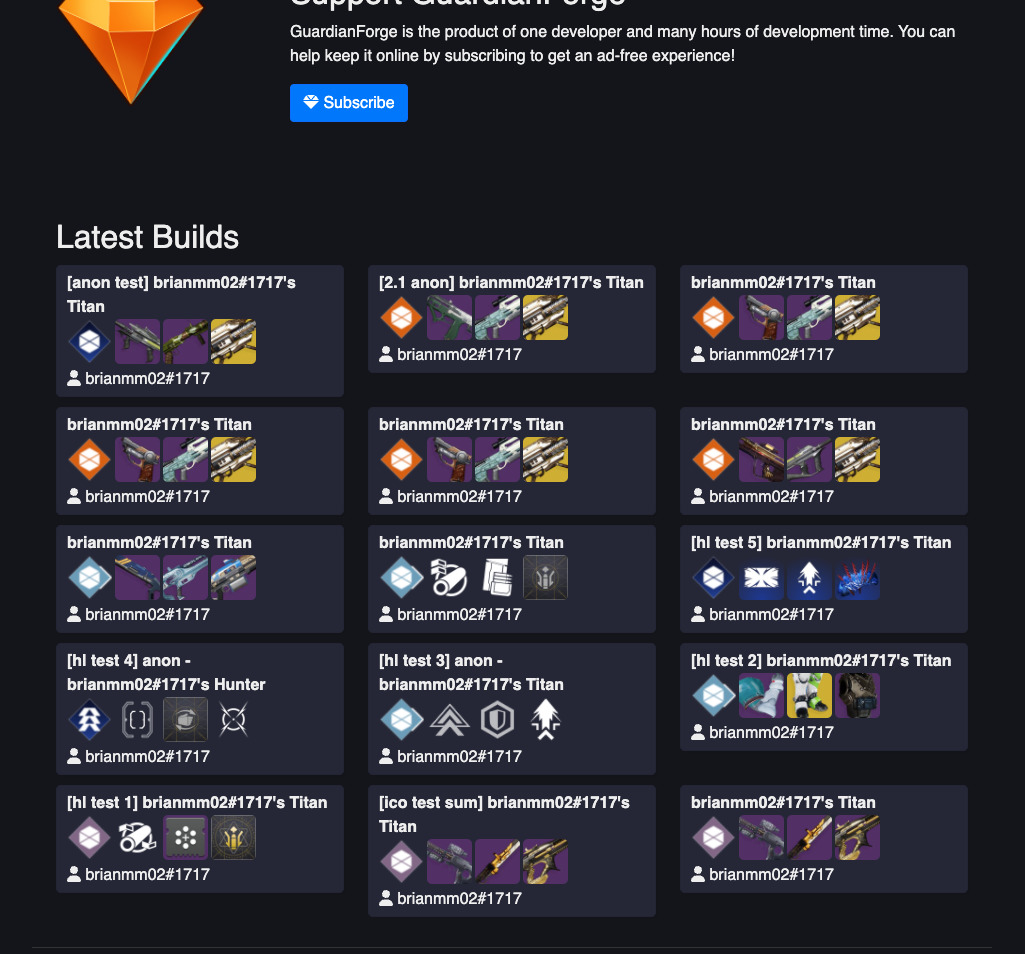

This is what is called whenever someone hits the GuardianForge home page or logs in for the first time. At the bottom of the page, there is a list of the latest 15 builds.

The current Latest Builds view.

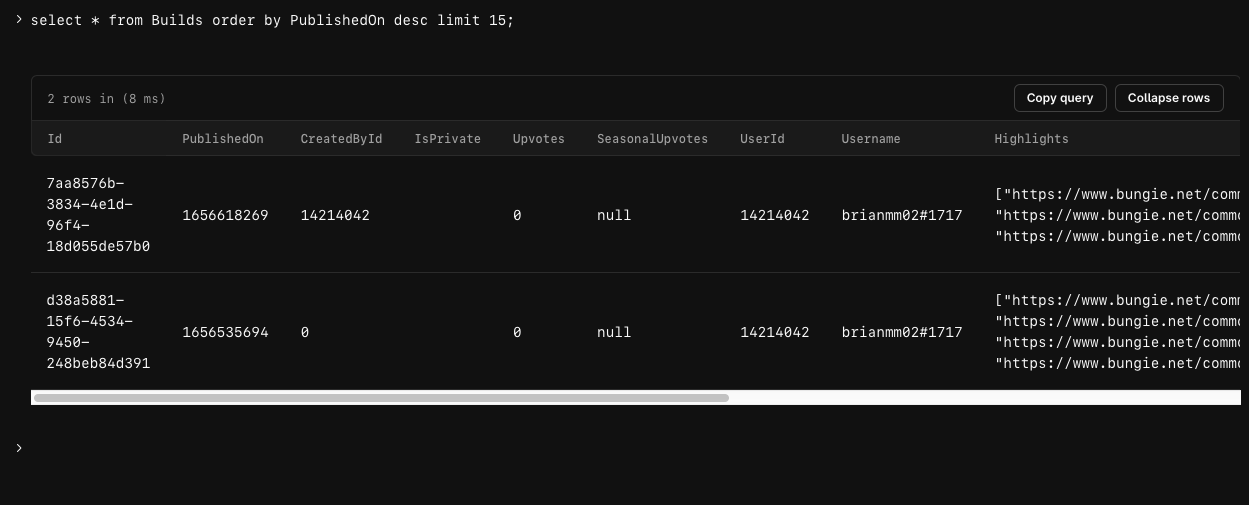

So let’s switch this over to work with PlanetScale now. I’ll add another function to fetch the latest builds, sorted in reverse by PublishedOn, which is the unix timestamp of when that build was created. Here is the query from from the PlanetScale console.

Latest builds in PlanetScale.

This function is a bit bigger than the ones we’ve seen so far, but that’s primarily due to the reason that you need to iterate over each row and append it to a slice before returning. I’m also only returning the summaries here, although that doesn’t change the code too much.

// backend/core/services/planetscale.go

func PSFetchLatestBuilds() ([]dbModels.BuildSummary, error) {

err := connect()

if err != nil {

return nil, errors.Wrap(err, "(PSFetchLatestBuilds) connect")

}

query := "SELECT * FROM Builds ORDER BY PublishedOn DESC LIMIT 15"

stmt, err := psdb.Prepare(query)

if err != nil {

return nil, errors.Wrap(err, "(PSFetchLatestBuilds) Prepare")

}

defer stmt.Close()

rows, err := stmt.Query()

if err != nil {

return nil, errors.Wrap(err, "(PSFetchLatestBuilds) query")

}

defer rows.Close()

summaries := []dbModels.BuildSummary{}

for rows.Next() {

build := dbModels.Build{

Summary: dbModels.BuildSummary{},

}

var isPrivate *bool

var seasonalUpvotesString string

var highlightsString string

err = rows.Scan(

&build.Id,

&build.PublishedOn,

&build.CreatedById,

&isPrivate,

&build.Upvotes,

&seasonalUpvotesString,

&build.Summary.UserId,

&build.Summary.Username,

&highlightsString,

&build.Summary.Name,

&build.Summary.PrimaryIconSet,

)

if isPrivate != nil && *isPrivate == true {

build.IsPrivate = true

}

if seasonalUpvotesString != "" {

err = json.Unmarshal([]byte(seasonalUpvotesString), &build.Summary.Highlights)

if err != nil {

return nil, errors.Wrap(err, "(PSFetchLatestBuilds) unmarshal seasonal upvotes")

}

}

if highlightsString != "" {

err = json.Unmarshal([]byte(highlightsString), &build.Summary.Highlights)

if err != nil {

return nil, errors.Wrap(err, "(PSFetchLatestBuilds) unmarshal highlights")

}

}

summaries = append(summaries, build.Summary)

}

return summaries, err

}

And after modifying the necessary handler, we have great success!

The Latest Builds view after switching the data to PlanetScale.

Summary

So one of my first assigned tasks at PlanetScale has been to essentially explore the platform and get a feel for it. I work much better with real world examples, and since I host a real world product, it only makes sense to use it as a base for working with new services. In general, getting up and running with PlanetScale has been relevantly straightforward. Once the basic database is setup, its working with SQL as you would in any other Go project, which is pretty awesome that there isn’t any kind of fancy new syntax to learn.

Feel free to review the source at https://github.com/GuardianForge/guardianforge.net/tree/dev/planetscale-poc.